Exploring Complexity in Qualitative Research: Designing a System for Collaborative Analysis

Agency by Design (AbD) research assistant Sarah May explores the complex nature of working with qualitative data, drawing on her experience collaboratively coding and analyzing AbD’s interview transcripts.

Greetings! My name is Sarah, and I’ve been an AbD Research Assistant, along with my coworker Amy, since October 2014. Although we haven’t been active on the blog, Amy and I have been hard at work processing and analyzing the AbD interview data, which you may have read a bit about elsewhere on this blog.

Over the past couple of years, the AbD team has interviewed maker education practitioners and thought leaders across a variety of educational contexts. We mentioned the interview strand of our research in our very first blog post, and we touched on some emergent findings from these interviews in our white paper. However, we haven’t said much about what the interview process really looks like. Part of our hesitancy may have stemmed from the fact that this work has been ongoing and iterative. We’ve been charged with the complex and time-consuming task of analyzing qualitative interview data. But this complexity is nothing to shy away from. So what did this process look like?

From the outset, analyzing these interview responses was a challenge. Much of the beauty of human language lies in its flexibility and fluidity. Two interviewees might use the same word in different ways, while others might use different words to describe the same idea. Indeed, the interviews we read were complex, nuanced, and deeply insightful. This quality of language, beautiful as it is, becomes a great challenge in qualitative studies. As researchers, Amy and I were faced with a design problem: How do you set up a system that lets researchers talk about language and ideas that lack a fixed, shared meaning?

From the start, we knew that one major goal of this system would be to organize the data in a sensible, rigorous way. We needed a way to code interview responses into categories by common theme. In other words, how could we label different sections of these interviews so that we could start to see where similar ideas were cropping up? As in any research project, Amy and I also had to make sure we coded interview responses with a high level of reliability: my understanding of someone’s response to a question had to match Amy’s, and we both had to agree on which code best captured the idea expressed.

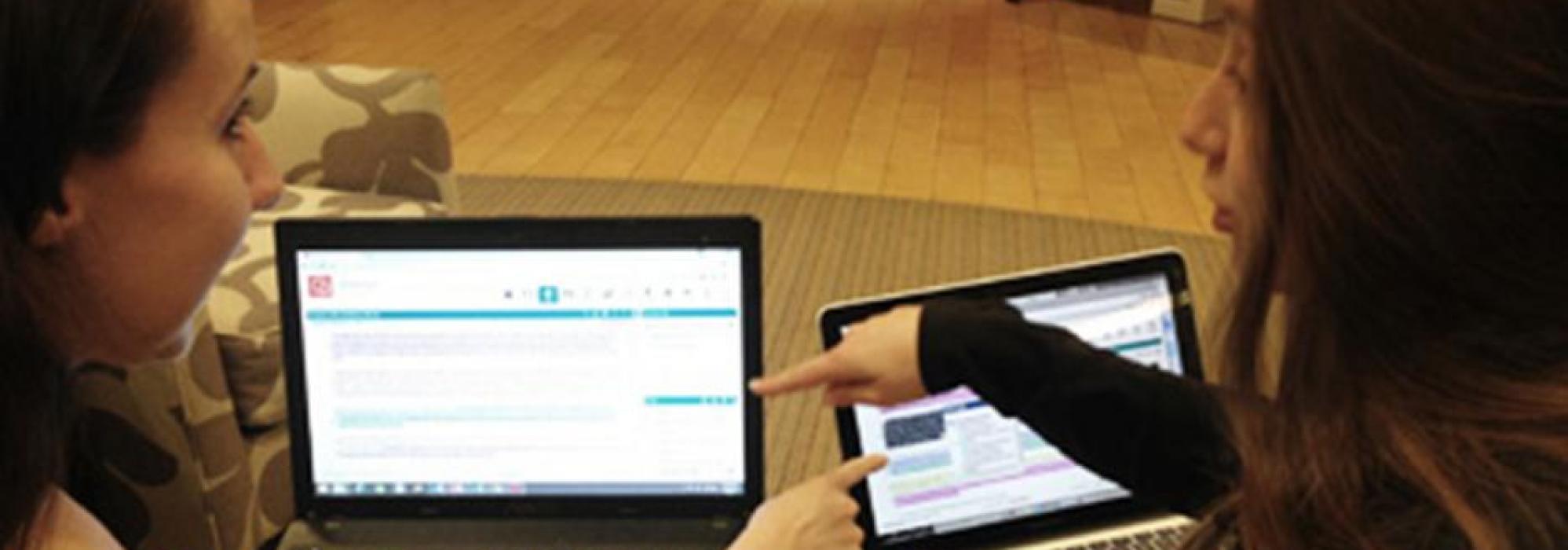

To accomplish these goals, Amy and I went through a series of steps. Our system was structured around a codebook, a list of codes developed by the AbD team that formed the skeleton of our coding process. Some of these codes were chosen beforehand, based on our research questions (our etic codes). Others were developed after we got to know the interview data and saw which themes emerged (our emic codes). To apply the codes, Amy and I would split up, each read an interview, code it independently, and note any questions we had along the way. Then we’d come together, camp out on a couch in the library, and spend hours reading each other’s coded interviews and talking through the codes we had applied. To stay true both to the codes and to the interview text, we often had to look very closely at a single line or paragraph. What was the context? How had this person described the idea earlier or later in the interview? Sometimes we even went back to the audio to better understand the tone of the conversation before interpreting the words on the page. We also reflected on our own definitions of the codes and, when needed, added, altered, or removed codes from the codebook along the way.

Our system was cyclical. We worked alone, met to refine each other’s work, and then split up again. We also returned to interviews multiple times as our understanding of the codes sharpened. With each pass through this cycle, the coding of the interviews grew progressively clearer.

This collaborative coding system produced more than fully coded interviews ready for the next step in analysis. Amy and I also emerged from the process with a better mutual understanding of our codes and the ideas they represent. Next, we’ll begin seeking the stories within and between the codes to help illuminate and elaborate on our emergent findings. Another collaborative process, another design challenge. Stay tuned…

*****

Sarah May is a research assistant for the Agency by Design team and a master’s student at the Harvard Graduate School of Education. Her interest in research and evaluation of informal education spaces and arts-related experiences drew her to the AbD project. Through her work at AbD, she has focused on learning more about the process of qualitative research, as well as absorbing the insights and ideas about maker-centered education that may be relevant to her work in other arts settings.